In the past, I have written several posts on the topic of word finding difficulties (HERE and HERE) as well as narrative assessments (HERE and HERE) of school-aged children. Today I am combining these topics by offering suggestions on how SLPs can identify word finding difficulties in narrative samples of school-aged children. Continue reading Identifying Word Finding Deficits in Narrative Retelling of School-Aged Children

Category: Assessment

Analyzing Narratives of School-Aged Children

As mentioned previously, for elicitation purposes, I frequently use the books recommended by the SALT Software website, which include: ‘Frog Where Are You?’ by Mercer Mayer, ‘Pookins Gets Her Way’ and ‘A Porcupine Named Fluffy’ by Helen Lester, as well as ‘Dr. DeSoto’ by William Steig.

Social Communication and Describing Skills: What is the Connection?

When it comes to the identification of social communication deficits, SLPs are in perpetual search of quick and reliable strategies that can help us validly confirm social communication difficulties. The problem is that in some situations it is not functional to conduct a standardized assessment, while in others a standardized assessment may have limited value (e.g., if the test does not assess social communication abilities or assesses them only to a limited degree).

So what types of tasks are sensitive to social communication deficits? Quite a few, actually. For starters, various types of narratives are quite sensitive to social communication impairment. From fictional to expository, narrative analysis can go a long way in determining whether the student presents with appropriate sequencing skills, adequate working memory, age-level grammar and syntax, adequate vocabulary, pragmatics, perspective-taking abilities, critical thinking skills, etc. But what if one doesn’t have the time to record and transcribe a narrative retelling? What then? Actually, a modified version of a narrative assessment task can still reveal a great deal about the student’s social communication abilities.

For the purpose of this particular task, I like to use photos depicting complex social communication scenarios. Then I simply ask the student: “Please describe what is happening in this photo.” “Wait a second,” you may say. “That’s it? This is way too simple! You can’t possibly determine if someone has social communication deficits based on a single photo description!”

I beg to differ. Here’s an interesting fact about students with social communication deficits: even those with an FSIQ in the superior range of functioning (>130) and exceptionally large lexicons still present with massive deficits when it comes to providing coherent and cohesive descriptions and summaries.

Here are just a few reasons why this happens. Research indicates that students with social communication difficulties present with Gestalt Processing deficits, or difficulty “seeing/grasping the big picture” (Happe & Frith, 2006). Rather than focusing on the main idea, they tend to focus on isolated details, and as a result they tend to provide incomplete or partial information about visual scenes, books, passages, stories, or movies. As such, despite possessing an impressive lexicon, such students may say about the above picture: “She is drawing” or “They are outside” and omit a number of details relevant to the picture.

Research also confirms that students with impaired social communication abilities have difficulty assuming the perspectives of others (e.g., relating to others, understanding/interpreting their beliefs, thoughts, feelings, etc.) (Kaland et al., 2007). As such, they may miss relevant visual clues pertaining to how the boy and girl are feeling, what they are thinking, etc.

Students with social communication deficits also present with anaphoric referencing difficulties. Rather than referring to individuals in books and pictures by name or gender, they may nonspecifically utilize personal pronouns ‘he’, ‘she’ or ‘they’ to refer to them. Consequently, they may describe the individuals in the above photo as follows: “She is drawing and the boy is looking”; or “They are sitting at the table outside.”

Finally, students with social communication deficits may produce poorly constructed run-on (exceedingly verbose) or fragmented (very brief) utterances lacking in coherence and cohesion when describing the main idea in the above scenario (Frith, 1989).

Of course, by now many of you want to know what constitutes a pragmatically appropriate description for students of varying ages. For that, you can visit a thread in the SLPs for Evidence-Based Practice Group on Facebook entitled: GIANT POST WITH FREE LINKS AND RESOURCES ON THE TOPIC OF TYPICAL SPEECH AND LANGUAGE MILESTONES OF CHILDREN 0-21 YEARS OF AGE to locate the relevant milestones by age.

Interested in seeing these assessment strategies in action? Download a FREEBIE HERE and see for yourselves.

References:

- Frith, U. (1989). Autism: Explaining the Enigma. Blackwell, Oxford.

- Happe, F. & Frith, U. (2006). The weak coherence account: Detail-focused cognitive style in Autism Spectrum Disorders. Journal of Autism and Developmental Disorders, 36(1), 5-25.

- Kaland, N., Callesen, K., Moller-Nielsen, A., Mortensen, E. L., & Smith, L. (2007). Performance of children and adolescents with Asperger Syndrome or High-functioning Autism on advanced theory of mind tasks. Journal of Autism and Developmental Disorders, 38, 1112-1123.

Clinical Assessment of Elementary-Aged Students’ Writing Abilities: Suggestions for SLPs

Recently I wrote a blog post regarding how SLPs can qualitatively assess writing abilities of adolescent learners. Today, due to popular demand, I am offering suggestions regarding how SLPs can assess writing abilities of early-elementary-aged students with suspected learning and literacy deficits. For the purpose of this post, I will focus on assessing the writing of second-grade students, since by second grade students are expected to begin producing simple written compositions several sentences in length (CCSS).

So how can we analyze the writing samples of young learners? For starters, it is important to know what the typical writing expectations look like for 2nd-grade students. Here is a sampling of typical expectations for second graders as per several sources (e.g., CCSS, Reading Rockets, Time4Writing, etc.):

- With respect to penmanship, students are expected to write legibly.

- With respect to grammar, students are expected to identify and correctly use basic parts of speech such as nouns and verbs.

- With respect to sentence structure students are expected to distinguish between complete and incomplete sentences as well as use correct subject/verb/noun/pronoun agreements and correct verb tenses in simple and compound sentences.

- With respect to punctuation, students are expected to use periods correctly at the end of sentences. They are expected to use commas in sentences with dates and items in a series.

- With respect to capitalization, students are expected to capitalize proper nouns, words at the beginning of sentences, letter salutations, months and days of the week, as well as titles and initials of people.

- With respect to spelling, students are expected to spell CVC (e.g., tap), CVCe (e.g., tape), as well as CCVC words (e.g., trap), high-frequency regularly and irregularly spelled words (e.g., were, said, why, etc.), basic inflectional endings (e.g., -ed, -ing, -s, etc.), as well as to recognize select orthographic patterns and rules (e.g., when to use ‘k’ or ‘c’ in CVC and CVCe words, how to drop one vowel (e.g., ‘y’) and replace it with another (‘i’), etc.).

Now let’s apply the above expectations to a writing sample of a 2nd-grade student whose parents are concerned with her writing abilities in addition to other language and learning concerns. This student was provided with a typical second grade writing prompt: “Imagine you are going to the North Pole. How are you going to get there? What would you bring with you? You have 15 minutes to write your story. Please make your story at least 4 sentences long.”

The following is the transcribed story produced by her. “I am going in the north pole. I am going to bring food my mom toy’s stoft (stuffed) animals. I am so icsited (excited). So we are going in a box. We are going to go done (down) the stars (stairs) with the box and wate (wait) intile (until) the male (mail) is hear (here).”

Analysis: The student’s written composition content (thought formulation and elaboration) was judged to be impaired for her grade level. According to the CCSS, 2nd-grade students are expected to “write narratives in which they recount a well-elaborated event or short sequence of events, include details to describe actions, thoughts, and feelings, use temporal words to signal event order, and provide a sense of closure.” However, the above narrative sample by no means satisfies this requirement. The student’s writing contained numerous misspellings and lacked organization and clarity of message. While portions of her narrative appropriately addressed the question with respect to whom and what she was going to bring on her travels, her narrative lost coherence by her 4th sentence, when she wrote: “So we are going in a box” without further elaboration regarding what she meant by that sentence. Second-grade students are expected to engage in basic editing and revision of their work. This student took only four minutes to compose the above written sample and as such had more than adequate time to review the question as well as her response for spelling and punctuation errors and for clarity of message, which she did not do. Furthermore, despite being provided with a written prompt which contained the correct capitalization of a place, “North Pole”, the student did not capitalize it in her writing, which indicates ongoing executive function difficulties with respect to proofreading and attention to detail.

Impressions: Clinical assessment of the student’s writing revealed difficulties in the areas of spelling, capitalization, message clarity as well as lack of basic proofreading and editing, which require therapeutic intervention.

Now let us select a few writing goals for this student.

Long-Term Goals: Student will improve her writing abilities for academic purposes.

Short-Term Goals:

- Student will label parts of speech (e.g., adjectives, adverbs, prepositions, etc.) in compound sentences.

- Student will use declarative and interrogative sentence types for story composition purposes.

- Student will correctly use past, present, and future verb tenses during writing tasks.

- Student will use basic punctuation at the sentence level (e.g., commas, periods, and apostrophes in singular possessives, etc.).

- Student will use basic capitalization at the sentence level (e.g., capitalize proper nouns, words at the beginning of sentences, months and days of the week, etc.).

- Student will proofread her work via reading aloud for clarity.

- Student will edit her work for correct grammar, punctuation, and capitalization.

Notice that the above does not contain any spelling goals. That is because, given the complexity of her spelling profile, I prefer to tackle her spelling needs in a separate post, which discusses spelling development, assessment, as well as intervention recommendations for students with spelling deficits.

There you have it. A quick and easy qualitative writing assessment for elementary-aged students which can help determine the extent of the student’s writing difficulties as well as establish a few writing remediation targets for intervention purposes.

Using a different type of writing assessment with your students? Please share the details below so we can all benefit from each other’s knowledge of assessment strategies.

Help, My Student has a Huge Score Discrepancy Between Tests and I Don’t Know Why?

Here’s a familiar scenario to many SLPs. You’ve administered several standardized language tests to your student (e.g., CELF-5 & TILLS). You expected to see roughly similar scores across tests. Much to your surprise, you find that while your student attained somewhat average scores on one assessment, s/he completely bombed the second assessment, and you have no idea why that happened.

So you go on social media and start crowdsourcing for information from a variety of SLPs located in a variety of states and countries in order to figure out what has happened and what you should do about this. Of course, the problem in such situations is that while some responses will be spot on, many will be utterly inappropriate. Luckily, the answer lies much closer than you think, in the actual technical manual of the administered tests.

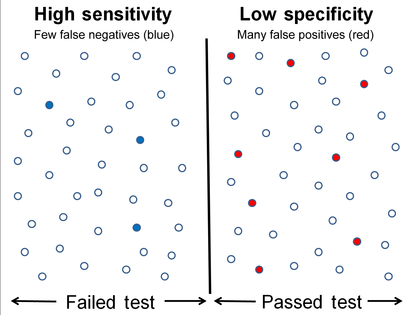

So what is responsible for such a drastic discrepancy? A few things, actually. For starters, unless both tests were co-normed (used the same sample of test takers), be prepared to see disparate scores due to the differing ability levels of children in the normative groups of each test. Another important factor in the score discrepancy is how accurately each test differentiates disordered children from typically functioning ones.

Let’s compare two actual language tests to learn more. For the purpose of this exercise, let us select the Clinical Evaluation of Language Fundamentals-5 (CELF-5) and the Test of Integrated Language and Literacy (TILLS). The former is a very familiar entity to numerous SLPs, while the latter is just coming into its own, having been released on the market only several years ago.

Both tests share a number of similarities. Both were created to assess the language abilities of children and adolescents with suspected language disorders. Both assess aspects of language and literacy (albeit not to the same degree nor with the same level of thoroughness). Both can be used for language disorder classification purposes, or can they?

Actually, my last statement is rather debatable. A careful perusal of the CELF-5 reveals that its normative sample of 3000 children included a whopping 23% of children with language-related disabilities. In fact, the folks from the Leaders Project did such an excellent and thorough job reviewing its psychometric properties that, rather than repeating that information, readers can simply click here to review the limitations of the CELF-5 straight on the Leaders Project website. Furthermore, even the CELF-5 developers themselves have stated: “Based on CELF-5 sensitivity and specificity values, the optimal cut score to achieve the best balance is -1.33 (standard score of 80). Using a standard score of 80 as a cut score yields sensitivity and specificity values of .97.”

In other words, obtaining a standard score of 80 or below on the CELF-5 indicates that a child presents with a language disorder. Of course, as many SLPs already know, the eligibility criteria in the schools require language scores far below that in order for the student to qualify to receive language therapy services.
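For readers who want to see where the “standard score of 80” in the quoted passage comes from, the arithmetic can be sketched in a few lines of Python. This is only an illustration: it assumes the conventional standard-score scale with a mean of 100 and a standard deviation of 15 (confirm the scale in the technical manual of the test you are using), and the function names are hypothetical.

```python
from statistics import NormalDist

# Assumed scale: standard scores with mean 100 and SD 15.
MEAN = 100.0
SD = 15.0


def z_to_standard_score(z: float) -> float:
    """Convert a z-score (distance from the mean in SDs) to a standard score."""
    return MEAN + SD * z


def z_to_percentile(z: float) -> float:
    """Percentile rank of a z-score under a normal distribution."""
    return NormalDist().cdf(z) * 100


cut_z = -1.33  # the cut score quoted above, expressed in SD units
print(round(z_to_standard_score(cut_z)))  # 80
print(round(z_to_percentile(cut_z), 1))   # percentile rank of the cut score
```

Under these assumptions, a cut score of -1.33 SDs sits at roughly the 9th percentile, which is well above the cutoffs many school programs use for eligibility.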

In fact, the test’s authors are fully aware of that and acknowledge that in the same document. “Keep in mind that students who have language deficits may not obtain scores that qualify him or her for placement based on the program’s criteria for eligibility. You’ll need to plan how to address the student’s needs within the framework established by your program.”

But here is another issue: the CELF-5 sensitivity group included only a very small number of children, “67 children ranging from 5;0 to 15;11”, whose only requirement was to score 1.5 SDs below the mean “on any standardized language test”. As the Leaders Project reviewers point out: “This means that the 67 children in the sensitivity group could all have had severe disabilities. They might have multiple disabilities in addition to severe language disorders including severe intellectual disabilities or Autism Spectrum Disorder making it easy for a language disorder test to identify this group as having language disorders with extremely high accuracy.” (pgs. 7-8)

Of course, this raises the question: why would anyone continue to administer a test to students if its administration (a) does not guarantee disorder identification and (b) will not make the student eligible for language therapy despite demonstrated need?

The problem is that even though SLPs are mandated to use a variety of quantitative clinical observations and procedures in order to reliably qualify students for services, standardized tests still carry more value than they should. Consequently, it is important for SLPs to select the right test to make their job easier.

The TILLS is a far less well-known assessment than the CELF-5, yet in the few years it has been on the market it has really made its presence felt as a solid assessment tool due to its valid and reliable psychometric properties. Again, the venerable Dr. Carol Westby has already done such an excellent job reviewing its psychometric properties that I will refer the readers to her review here rather than repeat this information, as doing so would not add anything new on this topic. The upshot of her review is as follows: “The TILLS does not include children and adolescents with language/literacy impairments (LLIs) in the norming sample. Since the 1990s, nearly all language assessments have included children with LLIs in the norming sample. Doing so lowers overall scores, making it more difficult to use the assessment to identify students with LLIs.” (pg. 11)

Now, here many proponents of including children with language disorders in the normative sample will make a variation of the following claim: “You CANNOT diagnose a language impairment if children with language impairment were not included in the normative sample of that assessment!” Here’s a major problem with such an assertion. When a child is referred for a language assessment, we really have no way of knowing if this child has a language impairment until we actually finish testing them. We are in fact attempting to confirm or refute this, hopefully via the use of reliable and valid testing. However, if the normative sample includes many children with language and learning difficulties, this significantly affects the accuracy of our identification, since we are interested in comparing this child’s results to those of typically developing children, not disordered ones, in order to learn if the child has a disorder in the first place. As per Peña, Spaulding and Plante (2006), “the inclusion of children with disabilities may be at odds with the goal of classification, typically the primary function of the speech pathologist’s assessment. In fact, by including such children in the normative sample, we may be “shooting ourselves in the foot” in terms of testing for the purpose of identifying disorders.” (p. 248)
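The effect described above can be illustrated with a small Python sketch. Every number in it is hypothetical and chosen only for illustration (a raw-score scale with a typical-group mean of 50 and SD of 10, an impaired group averaging 1.5 SDs lower, and a 23% share of impaired children in the mixed sample); none of these values come from any test manual, and the function names are my own.

```python
import math


def mixed_norm_stats(typical_mean, typical_sd, impaired_mean, impaired_sd, p_impaired):
    """Mean and SD of a norming sample that mixes typical and impaired children."""
    mean = (1 - p_impaired) * typical_mean + p_impaired * impaired_mean
    # Second moment of the mixture, then variance = E[X^2] - mean^2.
    second_moment = (
        (1 - p_impaired) * (typical_sd**2 + typical_mean**2)
        + p_impaired * (impaired_sd**2 + impaired_mean**2)
    )
    return mean, math.sqrt(second_moment - mean**2)


def standard_score(raw, norm_mean, norm_sd):
    """Standard score (mean 100, SD 15) of a raw score against a given norm group."""
    return 100 + 15 * (raw - norm_mean) / norm_sd


# Hypothetical raw-score distributions (illustration only).
TYPICAL_MEAN, TYPICAL_SD = 50.0, 10.0
IMPAIRED_MEAN, IMPAIRED_SD = 35.0, 10.0
P_IMPAIRED = 0.23

child_raw = 37.0  # the same child, the same performance

typical_only = standard_score(child_raw, TYPICAL_MEAN, TYPICAL_SD)
mixed_mean, mixed_sd = mixed_norm_stats(
    TYPICAL_MEAN, TYPICAL_SD, IMPAIRED_MEAN, IMPAIRED_SD, P_IMPAIRED
)
mixed = standard_score(child_raw, mixed_mean, mixed_sd)

print(f"Typical-only norms: {typical_only:.1f}")
print(f"Mixed norms:        {mixed:.1f}")
```

In this sketch the same raw performance earns a noticeably higher standard score against the mixed norms, because the impaired children pull the norm mean down and stretch the SD. That is the mechanism by which a struggling student can look “somewhat average” on one test and clearly impaired on another.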

Then there’s a variation of this assertion, which I have seen in several Facebook groups: “Children with language disorders score at the low end of normal distribution”. Once again, such an assertion is incorrect, since Spaulding, Plante & Farinella (2006) have actually shown that on average these kids will score at least 1.28 SDs below the mean, which is not the low average range of the normal distribution by any means. As per the authors: “Specific data supporting the application of “low score” criteria for the identification of language impairment is not supported by the majority of current commercially available tests. However, alternate sources of data (sensitivity and specificity rates) that support accurate identification are available for a subset of the available tests.” (p. 61)

Now, let us get back to your student in question, who performed so differently on the two administered tests. Given his clinically observed difficulties, you fully expected your testing to confirm them. But you are now more confused than before. Don’t be! Search each test’s technical manual for its sensitivity and specificity values. Vance and Plante (1994) put forth the following criteria for accurate identification of a disorder (discriminant accuracy): “90% should be considered good discriminant accuracy; 80% to 89% should be considered fair. Below 80%, misidentifications occur at unacceptably high rates”, leading to “serious social consequences” for misidentified children (p. 21).
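As a rough aid for applying the discriminant-accuracy bands quoted above, here is a minimal Python sketch. Sensitivity and specificity are computed with their standard definitions; the counts in the example are hypothetical and do not describe any real test, and the function names are my own.

```python
def sensitivity(true_positives: int, false_negatives: int) -> float:
    """Proportion of children WITH a disorder whom the test correctly identifies."""
    return true_positives / (true_positives + false_negatives)


def specificity(true_negatives: int, false_positives: int) -> float:
    """Proportion of typically developing children whom the test correctly passes."""
    return true_negatives / (true_negatives + false_positives)


def rate_discriminant_accuracy(value: float) -> str:
    """Apply the quoted bands: 90%+ good, 80% to 89% fair, below 80% unacceptable."""
    if value >= 0.90:
        return "good"
    if value >= 0.80:
        return "fair"
    return "unacceptable"


# Hypothetical validation sample: 40 children with a confirmed language
# disorder and 60 typically developing children.
sens = sensitivity(true_positives=34, false_negatives=6)
spec = specificity(true_negatives=57, false_positives=3)

print(f"Sensitivity {sens:.2f}: {rate_discriminant_accuracy(sens)}")
print(f"Specificity {spec:.2f}: {rate_discriminant_accuracy(spec)}")
```

When reading a manual, check both values at the cut score you actually plan to use, since a test can look “good” at one cut score and “unacceptable” at another.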

Review the sensitivity and specificity of your test(s) and take a look at the normative samples to see if anything unusual jumps out at you that leads you to believe that the administered test may have some issues with assessing what it purports to assess. Then, after supplementing your standardized testing results with good quality clinical data (e.g., narrative samples, dynamic assessment tasks, etc.), consider creating a solidly referenced purchasing pitch to your administration to invest in more valid and reliable standardized tests.

Hope you find this information helpful in your quest to better serve the clients on your caseload. If you are interested in learning more regarding evidence-based assessment practices as well as psychometric properties of various standardized speech-language tests, visit the SLPs for Evidence-Based Practice group on Facebook to learn more.

References:

- Peña, E. D., Spaulding, T. J., & Plante, E. (2006). The composition of normative groups and diagnostic decision-making: Shooting ourselves in the foot. American Journal of Speech-Language Pathology, 15, 247-254.

- Spaulding, T. J., Plante, E., & Farinella, K. A. (2006). Eligibility criteria for language impairment: Is the low end of normal always appropriate? Language, Speech, and Hearing Services in Schools, 37, 61-72.

- Vance, R., & Plante, E. (1994). Selection of preschool language tests: A data-based approach. Language, Speech, and Hearing Services in Schools, 25, 15-24.

Components of Qualitative Writing Assessments: What Exactly are We Trying to Measure?

Writing! The one assessment area that challenges many SLPs on a daily basis! If one polls 10 SLPs on the topic of writing, one will get 10 completely different responses, ranging from agreement to rejection, along with diverse opinions regarding what should actually be assessed and how exactly it should be accomplished.

Consequently, today I want to focus on the basics involved in the assessment of adolescent writing. Why adolescents, you may ask? Well, frankly because many SLPs (myself included) are far more likely to assess the writing abilities of adolescents rather than elementary-aged children.

Often, when the students are younger and their literacy abilities are weaker, the SLPs may not get to the assessment of writing abilities due to the students presenting with so many other deficits which require precedence intervention-wise. However, as the students get older and the academic requirements increase exponentially, SLPs may be more frequently asked to assess the students’ writing abilities because difficulties in this area significantly affect them in a variety of classes on a variety of subjects.

So what can we assess when it comes to writing? In the words of Helen Lester’s character ‘Pookins’: “Lots!” There are various types of writing that can be assessed, the most common of which include: expository, persuasive, and fictional. Each of these can be used for assessment purposes in a variety of ways.

To illustrate, if we chose to analyze the student’s written production of fictional narratives then we may broadly choose to analyze the following aspects of the student’s writing: contextual conventions and writing composition.

The former looks at such writing aspects as the use of correct spelling, punctuation, and capitalization, paragraph formation, etc.

The latter looks at the nitty-gritty elements involved in plot development. These include effective use of literate vocabulary, plotline twists, character development, use of dialogue, etc.

Perhaps we want to analyze the student’s persuasive writing abilities. After all, high school students are expected to utilize this type of writing frequently for essay writing purposes. Actually, persuasive writing is a complex genre which is particularly difficult for students with language-learning difficulties who struggle to produce essays that are clear, logical, convincing, appropriately sequenced, and take into consideration opposing points of view. It is exactly for that reason that persuasive writing tasks are perfect for assessment purposes.

But what exactly are we looking for analysis-wise? What should a typical 15-year-old’s persuasive essay contain?

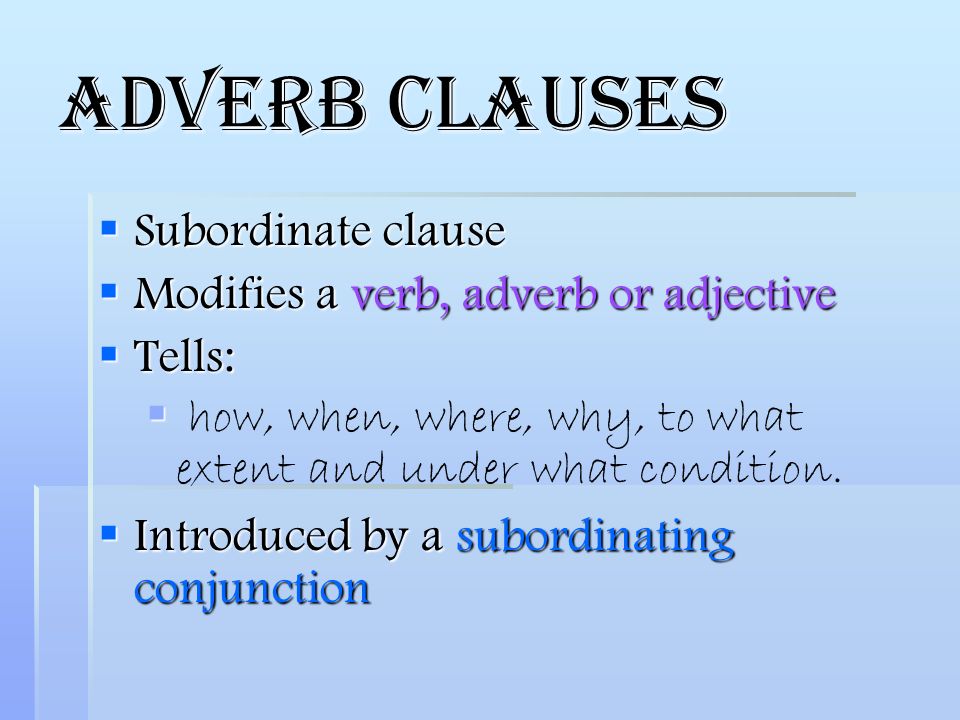

With respect to syntax, a typical student that age is expected to write complex sentences possessing nominal, adverbial, as well as relative clauses.

With respect to semantics, effective persuasive essays require the use of literate vocabulary words of low frequency such as later-developing connectors (e.g., first of all, next, for this reason, on the other hand, consequently, finally, in conclusion) as well as metalinguistic and metacognitive verbs (“metaverbs”) that refer to acts of speaking (e.g., assert, concede, predict, argue, imply) and thinking (e.g., hypothesize, remember, doubt, assume, infer).

With respect to pragmatics, as students mature, their sensitivity to the perspectives of others improves. As a result, their persuasive essays increase in length (i.e., total number of words produced) and they are able to offer a greater number of different reasons to support their own opinions (Nippold, Ward-Lonergan, & Fanning, 2005).

Now let’s apply our knowledge by analyzing a writing sample of a 15-year-old with suspected literacy deficits. The 10th-grade student below was provided with a written prompt, first described in the Nippold et al. (2005) study, entitled “The Circus Controversy”: “People have different views on animals performing in circuses. For example, some people think it is a great idea because it provides lots of entertainment for the public. Also, it gives parents and children something to do together, and the people who train the animals can make some money. However, other people think having animals in circuses is a bad idea because the animals are often locked in small cages and are not fed well. They also believe it is cruel to force a dog, tiger, or elephant to perform certain tricks that might be dangerous. I am interested in learning what you think about this controversy, and whether or not you think circuses with trained animals should be allowed to perform for the public. I would like you to spend the next 20 minutes writing an essay. Tell me exactly what you think about the controversy. Give me lots of good reasons for your opinion. Please use your best writing style, with correct grammar and spelling. If you aren’t sure how to spell a word, just take a guess.” (Nippold, Ward-Lonergan, & Fanning, 2005)

He produced the following written sample during the allotted 20 minutes.

Analysis: This student was able to generate a short, 3-paragraph, composition containing an introduction and a body without a definitive conclusion. His persuasive essay was judged to be very immature for his grade level due to significant disorganization, limited ability to support his point of view as well as the presence of tangential information in the introduction of his composition, which was significantly compromised by many writing mechanics errors (punctuation, capitalization, as well as spelling) that further impacted the coherence and cohesiveness of his written output.

The student’s introduction began with an inventive dialogue, which was irrelevant to the body of his persuasive essay. He did have three important points relevant to the body of the essay: animal cruelty, danger to the animals, and potential for the animals to harm humans. However, he was unable to adequately develop those points into full paragraphs. The notable absence of proofreading and editing of the composition further contributed to its lack of clarity. The above, coupled with the lack of a conclusion, was not commensurate with grade-level expectations.

Based on the above-written sample, the student’s persuasive composition content (thought formulation and elaboration) was judged to be significantly immature for his grade level and is commensurate with the abilities of a much younger student. The student’s composition contained several emerging claims that suggested a vague position. However, though the student attempted to back up his opinion and support his position (animals should not be performing in circuses), ultimately he was unable to do so in a coherent and cohesive manner.

Now that we know what the student’s written difficulties look like, the following goals will be applicable with respect to his writing remediation:

Long-Term Goals: Student will improve his written abilities for academic purposes.

- Short-Term Goals

- Student will appropriately utilize parts of speech (e.g., adjectives, adverbs, prepositions, etc.) in compound and complex sentences.

- Student will use a variety of sentence types for story composition purposes (e.g., declarative, interrogative, imperative, and exclamatory sentences).

- Student will correctly use past, present, and future verb tenses during writing tasks.

- Student will utilize appropriate punctuation at the sentence level (e.g., periods, commas, colons, quotation marks in dialogue, apostrophes in singular possessives, etc.).

- Student will utilize appropriate capitalization at the sentence level (e.g., capitalize proper nouns, holidays, product names, titles with names, initials, geographic locations, historical periods, special events, etc.).

- Student will use prewriting techniques to generate writing ideas (e.g., list keywords, state key ideas, etc.).

- Student will determine the purpose of his writing and his intended audience in order to establish the tone of his writing as well as outline the main idea of his writing.

- Student will generate a draft in which information is organized in chronological order via use of temporal markers (e.g., “meanwhile,” “immediately”) as well as cohesive ties (e.g., ‘but’, ‘yet’, ‘so’, ‘nor’) and cause/effect transitions (e.g., “therefore,” “as a result”).

- Student will improve coherence and logical organization of his written output via the use of revision strategies (e.g., modify supporting details, use sentence variety, employ literary devices).

- Student will edit his draft for appropriate grammar, spelling, punctuation, and capitalization.

There you have it: a quick and easy qualitative writing assessment that can help SLPs determine the extent of the student’s writing difficulties as well as establish writing remediation targets for intervention purposes.

Using a different type of writing assessment with your students? Please share the details below so we can all benefit from each other’s knowledge of assessment strategies.

References:

- Nippold, M., Ward-Lonergan, J., & Fanning, J. (2005). Persuasive writing in children, adolescents, and adults: a study of syntactic, semantic, and pragmatic development. Language, Speech, and Hearing Services in Schools, 36, 125-138.

FREE Resources for Working with Russian Speaking Clients: Part III Introduction to “Dyslexia”

Given the rising interest in recent years in the role of SLPs in the treatment of reading disorders, today I wanted to share with parents and professionals several reputable FREE resources on the subject of “dyslexia” in Russian-speaking children.

Now if you already knew that there was a dearth of resources on the topic of treating Russian speaking children with language disorders then it will not come as a complete shock to you that very few legitimate sources exist on this subject.

First up is the Report on the Russian Language for the World Dyslexia Forum 2010 by Dr. Grigorenko, the coauthor of the Dyslexia Debate. This 25-page report contains important information including Reading/Writing Acquisition of Russian in the Context of Typical and Atypical Development as well as on the state of Individuals with Dyslexia in Russia.

Next up is this delightful presentation entitled: “If John were Ivan: Would he fail in reading? Dyslexia & dysgraphia in Russian“. It is a veritable treasure trove of useful information on the topics of:

- The Russian language

- Literacy in Russia (Russian Federation)

- Dyslexia in Russia

- Definition

- Identification

- Policy

- Examples of good practice

- Teaching reading/language arts

• In regular schools

• In specialized settings

- Encouraging children to learn

Now let us move on to “The Role of Phonology, Morphology, and Orthography in English and Russian Spelling”, which reports that “phonology and morphology contribute more for spelling of English words while orthography and morphology contribute more to the spelling of Russian words”. Its appendices also give clinicians access to the stimuli from the orthographic awareness and spelling tests in both English and Russian.

Finally, for parents and Russian speaking professionals, there’s an excellent article entitled, “Дислексия” in which Dr. Grigorenko comprehensively discusses the state of the field in Russian including information on its causes, rehabilitation, etc.

Related Helpful Resources:

- Анализ Нарративов У Детей С Недоразвитием Речи (Narrative Discourse Analysis in Children With Speech Underdevelopment)

- Narrative production weakness in Russian dyslexics: Linguistic or procedural limitations?

FREE Resources for Working with Russian Speaking Clients: Part II

A few years ago I wrote a blog post entitled “Working with Russian-speaking clients: implications for speech-language assessment” the aim of which was to provide some suggestions regarding assessment of bilingual Russian-American birth-school age population in order to assist SLPs with determining whether the assessed child presents with a language difference, insufficient language exposure, or a true language disorder.

Today I wanted to provide Russian speaking clinicians with a few FREE resources pertaining to the typical speech and language development of Russian speaking children 0-7 years of age.

The materials below include several FREE questionnaires regarding Russian language development (words and sentences) of children 0-3 years of age, parent intake forms for Russian-speaking clients, as well as a few relevant charts pertaining to the development of phonology, word formation, lexicon, morphology, syntax, and metalinguistics of children 0-7 years of age.

It is, however, important to note that due to the absence of research and standardized studies on this subject much of the below information still needs to be interpreted with significant caution.

Select Speech and Language Norms:

- Некоторые нормативы речевого развития детей от 18 до 36 месяцев (по материалам МакАртуровского опросника) (Number of words and sentences by age for Russian-speaking children, based on the MacArthur-Bates inventories)

- Речевой онтогенез: Развитие Речи Ребенка В Норме 0-7 years of age (based on the work of А.Н. Гвоздев) includes: Фонетика, Словообразование, Лексика, Морфология, Синтаксис, Метаязыковая деятельность (phonology, word formation, lexicon, morphology, syntax, and metalinguistics)

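- Развитие связной речи у детей 3-7 лет (Development of connected speech in children 3-7 years of age)

a. Составление рассказа по серии сюжетных картинок (Composing a story from a series of sequenced pictures)

b. Пересказ текста (Text retelling)

c. Составление описательного рассказа (Composing a descriptive narrative)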

Select Parent Questionnaires (MacArthur-Bates, Adapted in Russian):

- Тест речевого и коммуникативного развития детей раннего возраста: слова и жесты (Words and Gestures)

- Тест речевого и коммуникативного развития детей раннего возраста: слова и предложения (Sentences)

- Анкета для родителей (Child Development Questionnaire for Parents)

Материал Для Родителей И Специалистов По Речевым Нарушениям (Material for Parents and Professionals on Speech Disorders) contains detailed information (27 pages) on Russian child development as well as common communication disrupting disorders

Stay tuned for more resources for Russian speaking SLPs coming shortly.

Related Resources:

- Working with Russian-speaking clients: implications for speech-language assessment

- Assessment of sound and syllable imitation in Russian speaking infants and toddlers

- Russian Articulation Screener

- Language Difference vs. Language Disorder: Assessment & Intervention Strategies for SLPs Working with Bilingual Children

- Impact of Cultural and Linguistic Variables On Speech-Language Services

It’s All Due to …Language: How Subtle Symptoms Can Cause Serious Academic Deficits

Scenario: Len is a 7;2-year-old 2nd-grade student who struggles with reading and writing in the classroom. He is very bright and has a high average IQ, yet when he is speaking he frequently can’t get his point across to others due to excessive linguistic reformulations and word-finding difficulties. The problem is that Len passed all the typical educational and language testing with flying colors, receiving average scores across the board on various tests including the Woodcock-Johnson Fourth Edition (WJ-IV) and the Clinical Evaluation of Language Fundamentals-5 (CELF-5). Stranger still is the fact that he aced the Comprehensive Test of Phonological Processing, Second Edition (CTOPP-2), so he is not even eligible for a “dyslexia” diagnosis. Len is clearly struggling in the classroom with coherently expressing himself, telling stories, understanding what he is reading, as well as putting his thoughts on paper. His parents have compiled impressively huge folders containing examples of his struggles. Yet because of his performance on the basic standardized assessment batteries, Len does not qualify for any functional assistance in the school setting, despite being virtually functionally illiterate in second grade.

The truth is that Len is quite a familiar figure to many SLPs, who at one time or another have encountered such a student and asked for guidance regarding the appropriate accommodations and services for him on various SLP-geared social media forums. But what makes Len such an enigma, one may inquire? Surely if the child had tangible deficits, wouldn’t standardized testing at least partially reveal them?

Well, it all depends, really, on what type of testing was administered to Len in the first place. A few years ago I wrote a post entitled: “What Research Shows About the Functional Relevance of Standardized Language Tests”. What researchers found is that there is a “lack of a correlation between frequency of test use and test accuracy, measured both in terms of sensitivity/specificity and mean difference scores” (Betz et al, 2013, 141). Furthermore, they also found that the most frequently used tests were the comprehensive assessments, including the Clinical Evaluation of Language Fundamentals and the Preschool Language Scale, as well as one-word vocabulary tests such as the Peabody Picture Vocabulary Test. The most damaging finding was the fact that “frequently SLPs did not follow up the comprehensive standardized testing with domain-specific assessments (critical thinking, social communication, etc.) but instead used the vocabulary testing as a second measure” (Betz et al, 2013, 140).

In other words, many SLPs only use the tests at hand rather than the RIGHT tests aimed at identifying the student’s specific deficits. But the problem doesn’t actually stop there. Due to the variation in psychometric properties of various tests, many children with language impairment are overlooked by standardized tests by receiving scores within the average range or not receiving low enough scores to qualify for services.

Thus, “the clinical consequence is that a child who truly has a language impairment has a roughly equal chance of being correctly or incorrectly identified, depending on the test that he or she is given.” Furthermore, “even if a child is diagnosed accurately as language impaired at one point in time, future diagnoses may lead to the false perception that the child has recovered, depending on the test(s) that he or she has been given” (Spaulding, Plante & Farinella, 2006, 69).
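To make the terms concrete, sensitivity is the proportion of children who truly have a language impairment that a test correctly flags, and specificity is the proportion of typically developing children that it correctly passes. The sketch below uses made-up counts purely for illustration (they are not taken from any test manual or study), and the function names are hypothetical.

```python
def sensitivity(true_positives, false_negatives):
    """Proportion of truly impaired children the test identifies."""
    total = true_positives + false_negatives
    if total == 0:
        raise ValueError("no impaired children in the sample")
    return true_positives / total


def specificity(true_negatives, false_positives):
    """Proportion of typically developing children the test passes."""
    total = true_negatives + false_positives
    if total == 0:
        raise ValueError("no typically developing children in the sample")
    return true_negatives / total


# Hypothetical counts: of 100 children with a confirmed impairment,
# the test flags 52 and misses 48; of 100 typical children, 90 pass.
print(sensitivity(52, 48))   # close to a coin flip for impaired children
print(specificity(90, 10))
```

A test with sensitivity near 0.5, as in this invented example, is what a "roughly equal chance of being correctly or incorrectly identified" looks like in practice.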

There’s of course yet another factor affecting our hypothetical client, and that is his relatively young age. This is especially evident with many educational and language tests for children in the 5-7 age group. Because the bar is set so low, concept-wise, for these age groups, many children with moderate language and literacy deficits can pass these tests with flying colors, only to be flagged by them literally two years later and be identified with deficits far too late in the game. Coupled with the fact that many SLPs do not utilize non-standardized measures to supplement their assessments, Len is in a pretty serious predicament.

But what if there was a do-over? What could we do differently for Len to rectify this situation? For starters, we need to pay careful attention to his deficits profile in order to choose appropriate tests to evaluate his areas of need. This can be accomplished in a number of ways. The SLP can interview Len’s teacher and his caregiver/s in order to obtain a summary of his pressing deficits. Depending on the extent of the reported deficits, the SLP can also provide them with a referral checklist to mark off the most significant areas of need.

In Len’s case, we already have a pretty good idea regarding what’s going on. We know that he passed basic language and educational testing, so in the words of Dr. Geraldine Wallach, we need to keep “peeling the onion” via the administration of more sensitive tests to tap into Len’s reported areas of deficits which include: word-retrieval, narrative production, as well as reading and writing.

For that purpose, Len is a good candidate for the administration of the Test of Integrated Language and Literacy (TILLS), which was developed to identify language and literacy disorders, has good psychometric properties, and contains subtests for assessment of relevant skills such as reading fluency, reading comprehension, phonological awareness, spelling, as well as writing in school-age children.

Given Len’s reported history of narrative production deficits, Len is also a good candidate for the administration of the Social Language Development Test Elementary (SLDTE). Here’s why. Research indicates that narrative weaknesses significantly correlate with social communication deficits (Norbury, Gemmell & Paul, 2014). As such, it’s not just children with Autism Spectrum Disorders who present with impaired narrative abilities. Many children with developmental language disorder (DLD) (#devlangdis) can present with significant narrative deficits affecting their social and academic functioning, which means that their social communication abilities need to be tested to confirm or rule out the presence of these difficulties.

However, standardized tests are not enough, since even the best standardized tests have significant limitations. As such, several non-standardized assessments in the areas of narrative production, reading, and writing may be recommended for Len to meaningfully supplement his testing.

Let’s begin with an informal narrative assessment, which provides detailed information regarding microstructural and macrostructural aspects of storytelling as well as the child’s thought processes and socio-emotional functioning. My nonstandardized narrative assessments are based on the book elicitation recommendations from the SALT website. For 2nd graders, I use the book by Helen Lester entitled Pookins Gets Her Way. I first read the story to the child, then cover up the words and ask the child to retell the story based on pictures. I read the story first because: “the model narrative presents the events, plot structure, and words that the narrator is to retell, which allows more reliable scoring than a generated story that can go in many directions” (Allen et al, 2012, p. 207).

As the child is retelling his story I digitally record him using the Voice Memos application on my iPhone, for a later transcription and thorough analysis. During storytelling, I only use the prompts: ‘What else can you tell me?’ and ‘Can you tell me more?’ to elicit additional information. I try not to prompt the child excessively since I am interested in cataloging all of his narrative-based deficits. After I transcribe the sample, I analyze it and make sure that I include the transcription and a detailed write-up in the body of my report, so parents and professionals can see and understand the nature of the child’s errors/weaknesses.

Now we are ready to move on to a brief nonstandardized reading assessment. For this purpose, I often use the books from the Continental Press series entitled Reading for Comprehension, which contains books for grades 1-8. After I confirm with either the parent or the child’s teacher that the selected passage is reflective of the complexity of work presented in the classroom for his grade level, I ask the child to read the text. As the child is reading, I calculate the number of words he reads correctly per minute and note the types of errors he exhibits during reading. Then I ask the child to state the main idea of the text, summarize its key points, define select text-embedded vocabulary words, and answer a few verbally presented reading comprehension questions. After that, I provide the child with the accompanying worksheet of 5 multiple-choice questions and ask the child to complete it. I analyze my results in order to determine whether I have accurately captured the child’s reading profile.
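For readers who want to see the arithmetic behind the words-correct-per-minute figure, here is a minimal sketch. It assumes the common convention of subtracting errors from the words attempted and scaling to 60 seconds; the function names and the sample numbers are hypothetical, not data from an actual student.

```python
def words_correct_per_minute(words_attempted, errors, seconds):
    """Words read correctly, scaled to a one-minute rate."""
    if seconds <= 0:
        raise ValueError("reading time must be positive")
    if errors < 0 or errors > words_attempted:
        raise ValueError("errors must be between 0 and words attempted")
    words_correct = words_attempted - errors
    return words_correct * 60 / seconds


def reading_accuracy_percent(words_attempted, errors):
    """Percentage of attempted words that were read correctly."""
    if words_attempted <= 0:
        raise ValueError("words attempted must be positive")
    return (words_attempted - errors) / words_attempted * 100


# Hypothetical sample: 104 words attempted, 8 errors, 90 seconds.
print(words_correct_per_minute(104, 8, 90))            # 64.0
print(round(reading_accuracy_percent(104, 8), 1))      # 92.3
```

The resulting rate can then be compared against published oral reading fluency norms for the child's grade, alongside the qualitative error analysis.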

Finally, if any additional information is needed, I administer a nonstandardized writing assessment, which I base on the Common Core State Standards for 2nd grade. For this task, I provide a student with a writing prompt common for second grade and give him a period of 15-20 minutes to generate a writing sample. I then analyze the writing sample with respect to contextual conventions (punctuation, capitalization, grammar, and syntax) as well as story composition (overall coherence and cohesion of the written sample).

The above relatively short assessment battery (2 standardized tests and 3 informal assessment tasks), which takes approximately 2-2.5 hours to administer, allows me to create a comprehensive profile of the child’s language and literacy strengths and needs. It also allows me to generate targeted goals in order to begin effective and meaningful remediation of the child’s deficits.

Children like Len will, unfortunately, remain unidentified unless they are administered more sensitive tasks to better understand their subtle pattern of deficits. Consequently, to ensure that they do not fall through the cracks of our educational system due to misguided overreliance on a limited number of standardized assessments, it is very important that professionals select the right assessments, rather than the assessments at hand, in order to accurately determine the child’s areas of needs.

References:

- Allen, M, Ukrainetz, T & Carswell, A (2012) The narrative language performance of three types of at-risk first-grade readers. Language, Speech, and Hearing Services in Schools, 43(2), 205-221.

- Betz et al. (2013) Factors Influencing the Selection of Standardized Tests for the Diagnosis of Specific Language Impairment. Language, Speech, and Hearing Services in Schools, 44, 133-146.

- Hasbrouck, J. & Tindal, G. A. (2006). Oral reading fluency norms: A valuable assessment tool for reading teachers. The Reading Teacher, 59(7), 636-644.

- Norbury, C. F., Gemmell, T., & Paul, R. (2014). Pragmatics abilities in narrative production: a cross-disorder comparison. Journal of child language, 41(03), 485-510.

- Peña, E.D., Spaulding, T.J., & Plante, E. (2006). The Composition of Normative Groups and Diagnostic Decision Making: Shooting Ourselves in the Foot. American Journal of Speech-Language Pathology, 15, 247-254.

- Spaulding, Plante & Farinella (2006) Eligibility Criteria for Language Impairment: Is the Low End of Normal Always Appropriate? Language, Speech, and Hearing Services in Schools, 37, 61-72.

- Spaulding, Szulga, & Figueria (2012) Using Norm-Referenced Tests to Determine Severity of Language Impairment in Children: Disconnect Between U.S. Policy Makers and Test Developers. Journal of Speech, Language and Hearing Research. 43, 176-190.

The Reign of the Problematic PLS-5 and the Rise of the Hyperintelligent Potato

Those of us who have administered the PLS-5 since its release in 2011 know that the test is fraught with significant psychometric problems. Its poor sensitivity, specificity, validity, and reliability have been extensively discussed HERE and HERE as well as in numerous SLP groups on Facebook.

One of the most significant issues with this test is that its normative sample included a “clinical sample of 169 children aged 2-7;11 diagnosed with a receptive or expressive language disorder”.

The problem with such inclusion is that, according to Peña, Spaulding, & Plante (2006), when the purpose of a test is to identify children with language impairment, the inclusion of children with language impairment in the normative sample can reduce the accuracy of identification.

Indeed, upon its implementation, many clinicians began to note that this test significantly under-identified children with language impairments and overinflated their scores. As such, based on its presentation, due to strict district guidelines and qualification criteria, many children who would have qualified for services with the administration of the PLS-4, no longer qualified for services when administered the PLS-5.

For years, SLPs wrote to Pearson airing out their grievances regarding this test with responses ranging from irate to downright humorous as one can see from the below response form (helpfully provided by an anonymous responder).

And now it appears that Pearson is willing to listen. A few days ago, many of us who have purchased this test received an email containing a link to a survey powered by Survey Monkey, asking clinicians for their feedback regarding PLS-5 administration and how it can be improved. So if you are one of those clinicians, please go ahead and provide your honest feedback regarding this test. After all, our littlest clients deserve so much better than to be under-identified by this assessment and denied services as a result of its administration.

References:

- Peña, E.D., Spaulding, T.J., & Plante, E. (2006). The Composition of Normative Groups and Diagnostic Decision Making: Shooting Ourselves in the Foot.

- Spaulding, Plante & Farinella (2006) Eligibility Criteria for Language Impairment: Is the Low End of Normal Always Appropriate?

- Spaulding, Szulga, & Figueria (2012) Using Norm-Referenced Tests to Determine Severity of Language Impairment in Children: Disconnect Between U.S. Policy Makers and Test Developers

- Zimmerman, I. L., Steiner, V. G., & Pond, E. (2011). Preschool Language Scales-Fifth Edition (PLS-5). San Antonio, TX: Pearson.